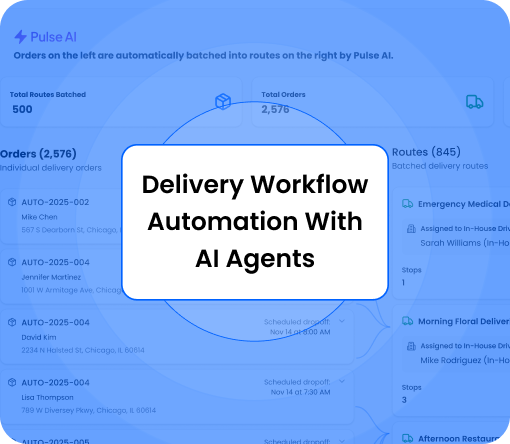

Customizing delivery workflows should make operations smoother, not slower. But as soon as a delivery program expands (more zones, more locations, more order types), workflow changes can start to feel risky. One rule tweak improves one region and breaks another. A new provider unlocks coverage but introduces different status updates, different pickup behavior, and different edge cases.

The real challenge isn’t flexibility. It’s controlled flexibility: workflows that adapt to different service levels and fulfillment patterns without creating a different process for every scenario. That’s what protects speed.

This guide breaks down how scalable delivery workflows are designed, where customization usually breaks down, and how automation and AI agents help teams keep reliability high as complexity grows.

Why flexible workflows matter as delivery networks grow

Growth isn’t just higher volume. It’s higher variation. The same delivery operation might need to support scheduled windows in one market, same-day in another, and multi-stop routing in a third. At the same time, customer expectations don’t adjust downward just because the network got more complex. Tracking should still be accurate. ETAs should still mean something. Exceptions shouldn’t require a dozen manual escalations.

The teams that scale cleanly don’t avoid complexity; they design workflows that handle it consistently. Flexibility matters most when it’s applied in the places where delivery networks naturally vary:

- time windows and cutoff rules

- zone and distance constraints

- provider coverage and capacity

- order handling requirements and exceptions

When that variation is handled through rules and automation (instead of human memory), speed stays predictable.

The workflow foundations that keep speed intact

Workflow customization goes off the rails when changes are layered on as one-off exceptions. The strongest delivery programs have a consistent “spine,” then allow controlled variation inside it.

A consistent workflow spine

A scalable workflow follows the same stages every time:

Order intake → validation → dispatch decision → execution & tracking → exceptions & recovery → completion & reporting

That doesn’t mean everything is identical. It means customization happens within the flow, instead of creating side processes that bypass it.
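One way to picture the spine is as a small state machine that every order moves through. The sketch below is illustrative only: the `Stage` names mirror the stages listed above, while the `ALLOWED` transition table (including the choice to let a clean delivery skip the recovery stage) and the `can_advance` helper are assumptions, not a prescribed design.

```python
from enum import Enum


class Stage(Enum):
    INTAKE = "order_intake"
    VALIDATION = "validation"
    DISPATCH_DECISION = "dispatch_decision"
    EXECUTION_TRACKING = "execution_and_tracking"
    EXCEPTION_RECOVERY = "exceptions_and_recovery"
    COMPLETION_REPORTING = "completion_and_reporting"


# Hypothetical transition table: customization changes what happens inside
# a stage, but an order can only move along these edges.
ALLOWED = {
    Stage.INTAKE: {Stage.VALIDATION},
    Stage.VALIDATION: {Stage.DISPATCH_DECISION},
    Stage.DISPATCH_DECISION: {Stage.EXECUTION_TRACKING},
    # Assumption: a delivery with no exception goes straight to completion.
    Stage.EXECUTION_TRACKING: {Stage.EXCEPTION_RECOVERY, Stage.COMPLETION_REPORTING},
    # Assumption: recovery may re-dispatch, resume tracking, or close out.
    Stage.EXCEPTION_RECOVERY: {
        Stage.DISPATCH_DECISION,
        Stage.EXECUTION_TRACKING,
        Stage.COMPLETION_REPORTING,
    },
    Stage.COMPLETION_REPORTING: set(),
}


def can_advance(current: Stage, nxt: Stage) -> bool:
    """Return True if the spine permits moving from `current` to `nxt`."""
    return nxt in ALLOWED[current]
```

A side process that tried to jump from intake directly to execution would be rejected by `can_advance`, which is the point: variation lives inside the stages, not around them.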

Readable rules that don’t require a specialist

If workflow logic is hard to understand, it becomes hard to maintain. A good rules layer is readable by operations teams and easy to update without fear.

Examples of rule logic that stays clear:

- Orders with a delivery window are dispatched based on window start and ready time

- High-value orders require specific proof of delivery steps

- Long-distance deliveries restrict provider options to those that serve that coverage model

- Certain SKUs route to specific delivery modes
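Rules like these tend to stay readable when each one is stored as data with a plain-language name. The following is a minimal sketch, not a reference implementation: the `Rule` class, the order fields (`value`, `distance_km`), and the thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str                        # plain-language description ops can read
    applies: Callable[[dict], bool]  # condition evaluated against an order
    effect: dict                     # settings the rule adds to the dispatch plan


# Hypothetical rules and thresholds, for illustration only.
RULES = [
    Rule(
        "High-value orders require signature and photo",
        lambda order: order.get("value", 0) >= 500,
        {"proof_of_delivery": ["signature", "photo"]},
    ),
    Rule(
        "Long-distance orders use long-haul providers only",
        lambda order: order.get("distance_km", 0) > 40,
        {"provider_pool": "long_haul"},
    ),
]


def evaluate(order: dict):
    """Apply every matching rule in order; return the effects and rule names."""
    effects, applied = {}, []
    for rule in RULES:
        if rule.applies(order):
            effects.update(rule.effect)
            applied.append(rule.name)
    return effects, applied
```

Returning the names of the rules that fired gives operations an audit trail for why an order was handled the way it was.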

Consistent statuses and a single source of truth

Workflow speed breaks when systems disagree on what’s happening. If a provider marks something “delivered” but the originating order system still thinks it’s “in transit,” the result is support tickets, incorrect notifications, and messy reporting.

A scalable setup normalizes statuses and maps provider updates into a shared internal status model so tracking, exceptions, and reporting stay consistent.
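In code, status normalization is often little more than a lookup table. The sketch below assumes two made-up providers (`provider_a`, `provider_b`) and invented raw status strings; real provider vocabularies will differ.

```python
from enum import Enum


class InternalStatus(Enum):
    ASSIGNED = "assigned"
    IN_TRANSIT = "in_transit"
    DELIVERED = "delivered"
    FAILED = "failed"
    UNKNOWN = "unknown"


# Hypothetical provider names and raw statuses, for illustration only.
STATUS_MAP = {
    ("provider_a", "DRIVER_ASSIGNED"): InternalStatus.ASSIGNED,
    ("provider_a", "DROPPED_OFF"): InternalStatus.DELIVERED,
    ("provider_b", "courier_en_route"): InternalStatus.IN_TRANSIT,
    ("provider_b", "completed"): InternalStatus.DELIVERED,
    ("provider_b", "undeliverable"): InternalStatus.FAILED,
}


def normalize_status(provider: str, raw_status: str) -> InternalStatus:
    """Map a provider-specific status to the shared internal model.

    Unmapped statuses become UNKNOWN so that gaps are visible rather than
    silently treated as a known state.
    """
    return STATUS_MAP.get((provider, raw_status), InternalStatus.UNKNOWN)
```

Counting how often `UNKNOWN` appears per provider is a cheap way to spot mapping gaps after onboarding a new partner.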

Where workflow customization usually breaks down

Most delivery teams don’t struggle because they’re missing ideas. They struggle because the system design turns basic decisions into recurring manual work.

Tool sprawl instead of integration

A common failure pattern looks like this: a new location launches, something doesn’t map cleanly, and a spreadsheet is created “just for now.” Then a second spreadsheet appears for exceptions. Then, a team starts checking provider portals manually to validate statuses. Before long, the workflow still exists, but it’s surrounded by manual scaffolding.

That scaffolding is what slows things down. It creates extra handoffs, inconsistent data, and “tribal knowledge” workflows that can’t scale.

Manual decision-making becomes the bottleneck

Manual work tends to collect in the same places:

- provider selection

- dispatch timing decisions

- exception handling

- customer communications during disruptions

At low volume, that’s manageable. At scale, it becomes the limiter. Even small delays in decision-making compound quickly across zones and locations.

Metrics become noisy, so improvements become guesswork

Workflow improvements should be measurable. When teams track performance differently by region or provider, it becomes hard to tell whether a workflow change worked or whether conditions just happened to be calmer that week.

Consistency matters here: shared definitions for on-time performance, failure types, and exception categories make the results of workflow customization trackable and repeatable.
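A shared definition is easiest to enforce when it exists as one function that every report calls. As a rough sketch, assuming a hypothetical five-minute grace period and deliveries represented as dictionaries with `promised_by` and `delivered_at` fields:

```python
from datetime import datetime, timedelta
from typing import Optional

# Assumption: one grace period applied to every zone and provider.
GRACE = timedelta(minutes=5)


def is_on_time(promised_by: datetime, delivered_at: Optional[datetime]) -> bool:
    """A delivery is on time if it completed within the promise plus grace."""
    return delivered_at is not None and delivered_at <= promised_by + GRACE


def on_time_rate(deliveries: list) -> Optional[float]:
    """Share of deliveries that were on time; None when there is no data."""
    if not deliveries:
        return None
    hits = sum(is_on_time(d["promised_by"], d["delivered_at"]) for d in deliveries)
    return hits / len(deliveries)
```

Undelivered orders count against the rate here rather than being dropped; whichever choice a team makes, making it once in code keeps regions comparable.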

The decisions worth automating first

Automation isn’t just about reducing work. It’s how workflows stay reliable when the network becomes dynamic. The best approach is to automate the decisions that most often slow teams down or create inconsistency.

Provider selection and dispatch logic

Provider selection shouldn’t depend on someone remembering which provider is best in a zone on a given day. It should be driven by policy: what matters most for that order type and that service commitment.

Dispatch policy commonly needs to account for:

- coverage and service availability

- time windows and cutoffs

- capacity and acceptance patterns

- performance indicators (success rate, on-time performance, exception frequency)

- cost after service requirements are met

Routing and batching

Once volume increases, batching and route efficiency stop being optional. They become central to protecting both cost and speed, especially for multi-stop delivery networks.

The difference between “batching exists” and “batching works” usually comes down to whether batching rules respect operational constraints (time windows, handling requirements, stop limits, vehicle constraints).
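To make the idea of constraint-aware batching concrete, here is a deliberately simple greedy sketch. It only models two of the constraints mentioned above (a stop limit and time-window compatibility) plus a zone match; the field names `zone` and `window_start_min` and the default limits are assumptions, and a production router would do far more.

```python
def batch_orders(orders, max_stops=3, max_window_spread_min=60):
    """Greedily group orders into batches that respect simple constraints.

    An order joins the current batch only if it is in the same zone, the
    batch is under the stop limit, and its window starts within
    `max_window_spread_min` minutes of the batch's first order.
    """
    batches = []
    ordered = sorted(orders, key=lambda o: (o["zone"], o["window_start_min"]))
    for order in ordered:
        last = batches[-1] if batches else None
        fits = (
            last is not None
            and last[0]["zone"] == order["zone"]
            and len(last) < max_stops
            and order["window_start_min"] - last[0]["window_start_min"]
            <= max_window_spread_min
        )
        if fits:
            last.append(order)
        else:
            batches.append([order])
    return batches
```

The useful property is that a constraint violation starts a new batch instead of producing a route that looks efficient on paper but misses a window.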

Exceptions and recovery

Exceptions are where delivery programs lose speed and lose trust. If exception handling is treated as a manual-only activity, support becomes the workflow.

The scalable approach: define exception types, define triggers, define the first-best action, and only escalate when the system reaches a policy boundary.

Where AI and agents fit in workflow customization

Rules and automation create structure. AI and agents add responsiveness when conditions change faster than humans can monitor.

Agents work best when they’re scoped to specific jobs. The goal is not “AI for everything,” but specialized decision support and execution for key workflow stages.

Agent job 1: Monitor and detect early risk

Most late deliveries don’t start late. They start with early signals: pickup dwell time increases, acceptance delays, stalled GPS movement, repeated attempt patterns, or abnormal route behavior.

A monitoring agent continuously watches those signals and flags orders trending toward risk before a delivery promise is missed. That’s what creates time to act.

What this looks like in practice:

A scheduled order is still “accepted,” but the pickup hasn’t progressed, and GPS movement is inconsistent. Instead of discovering the problem when the order is already late, the workflow flags it early and routes it into a predefined recovery path.
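A first version of this kind of monitoring does not need a model at all; threshold checks on a few signals already catch the scenario above. The sketch below is illustrative: the `OrderSignals` fields and the 15- and 10-minute thresholds are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class OrderSignals:
    order_id: str
    status: str                    # normalized internal status
    minutes_since_accept: float    # time since the provider accepted
    minutes_since_gps_move: float  # time since the last meaningful GPS change


def risk_flags(signals, max_accept_dwell=15, max_gps_stall=10):
    """Return the early-warning flags raised by one order's signals."""
    flags = []
    # Still "accepted" long after acceptance: pickup is not progressing.
    if signals.status == "accepted" and signals.minutes_since_accept > max_accept_dwell:
        flags.append("pickup_not_progressing")
    # No GPS movement for too long: driver may be stalled or offline.
    if signals.minutes_since_gps_move > max_gps_stall:
        flags.append("gps_stalled")
    return flags


def is_at_risk(signals) -> bool:
    """An order is at risk as soon as any flag is raised."""
    return bool(risk_flags(signals))
```

Orders for which `is_at_risk` returns true would then be handed to whatever recovery path the workflow defines.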

Agent job 2: Decide the next best action within policy

Once an order is at risk, the right response depends on context:

- how strict the delivery window is

- whether alternates exist nearby

- whether reroute is allowed by policy

- what the downstream impact is (customer comms, cost, SLA impact)

AI decisioning helps prioritize and recommend actions faster, while still honoring operational guardrails.
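Whatever produces the recommendation, the guardrails themselves can be expressed as ordinary code. This is a hedged sketch of one possible decision order; the function name, its three inputs, and the action labels are hypothetical, and downstream impact such as cost is left out for brevity.

```python
def next_action(window_is_strict: bool, backup_available: bool, reroute_allowed: bool) -> str:
    """Pick a recovery action for an at-risk order, staying inside policy."""
    # Rerouting is only considered when policy allows it and a backup exists.
    if reroute_allowed and backup_available:
        return "reassign_to_backup"
    # A strict window with no permitted reroute is a policy boundary:
    # hand the order to a person rather than guessing.
    if window_is_strict:
        return "escalate_to_human"
    # Flexible window: keep the current provider and reset expectations.
    return "update_eta_and_notify"
```

For example, `next_action(window_is_strict=True, backup_available=True, reroute_allowed=False)` escalates, because the policy flag outranks the fact that an alternate exists.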

Agent job 3: Execute quickly and consistently

Agents become valuable when they can take action, not just generate alerts. Execution can include triggering reroute logic, reassigning to backup providers, updating ETAs, and initiating notifications based on confirmed status changes.

Agents should reduce manual intervention without removing control. Rules define boundaries. Agents move faster inside those boundaries.
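One common way to keep that control is an allowlist: the agent may only run actions that policy names explicitly, and everything else is escalated and logged. A minimal sketch, with hypothetical action names and a caller-supplied `handlers` mapping:

```python
# Hypothetical set of actions an agent may take without human approval.
ALLOWED_ACTIONS = {"update_eta", "notify_customer", "reassign_to_backup"}


def execute(action, order_id, handlers, audit_log):
    """Run `action` for `order_id` if policy allows it; otherwise escalate.

    `handlers` maps action names to callables that take an order id.
    Every outcome is appended to `audit_log` so decisions stay reviewable.
    """
    handler = handlers.get(action)
    if action not in ALLOWED_ACTIONS or handler is None:
        audit_log.append((order_id, action, "escalated"))
        return "escalated"
    handler(order_id)
    audit_log.append((order_id, action, "executed"))
    return "executed"
```

Widening or narrowing what agents can do then becomes a one-line policy change rather than a change to the agent itself.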

Workflow patterns that scale across industries

Most scalable delivery programs rely on a small set of patterns that can be configured by zone, location, and order type without turning every variation into a custom build.

Two-lane fulfillment

Instead of building a different workflow for every promise type, run two lanes:

- ASAP / same-day

- Scheduled / windowed

Orders route into the correct lane based on simple inputs (time window, customer type, SKU category, location readiness), then the lane applies consistent rules.
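As a small illustration, lane assignment can be a single function and lane behavior a single configuration table. The sketch assumes the only input is whether the order carries a `window_start`; the `LANE_RULES` settings are placeholders, not recommended values.

```python
# Placeholder lane settings, for illustration only.
LANE_RULES = {
    "asap": {"dispatch": "immediately_when_ready", "batching": False},
    "scheduled": {"dispatch": "lead_time_before_window", "batching": True},
}


def choose_lane(order: dict) -> str:
    """Orders with a delivery window go to the scheduled lane, others to ASAP."""
    return "scheduled" if order.get("window_start") else "asap"


def plan(order: dict) -> dict:
    """Attach the lane and that lane's rules to an order."""
    lane = choose_lane(order)
    return {"lane": lane, **LANE_RULES[lane]}
```

Adding inputs such as customer type or SKU category would extend `choose_lane`, while the number of lanes stays at two.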

Policy-based provider selection

Treat provider selection as a policy, not a habit. A simple hierarchy keeps the decision model consistent:

- must meet service requirement (window, coverage)

- prefer reliability inputs (on-time performance, exception rate)

- use cost optimization when service requirements are already satisfied

This avoids “cheap but late” outcomes that cost more in support and customer churn.
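The hierarchy translates naturally into a filter, then a preference, then a cost comparison. The following sketch is one possible reading of it; the `Provider` fields, the 0.9 reliability threshold, and the fallback to the most reliable provider when none clears the threshold are assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Provider:
    name: str
    covers_zone: bool
    can_meet_window: bool
    on_time_rate: float  # historical share of on-time deliveries, 0..1
    cost: float


def select_provider(providers, min_on_time: float = 0.9) -> Optional[Provider]:
    """Apply the policy in order: service requirement, reliability, then cost."""
    # 1. Must meet the service requirement (coverage and window).
    eligible = [p for p in providers if p.covers_zone and p.can_meet_window]
    if not eligible:
        return None
    # 2. Prefer providers that clear the reliability threshold.
    reliable = [p for p in eligible if p.on_time_rate >= min_on_time]
    if reliable:
        # 3. Optimize cost only among providers that already qualify.
        return min(reliable, key=lambda p: p.cost)
    # Assumption: with no reliable option, take the most reliable eligible one.
    return max(eligible, key=lambda p: p.on_time_rate)
```

Because cost is compared last, the cheapest provider wins only when it has already passed the service and reliability checks.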

Exception playbooks by type

Exceptions repeat. Recovery should too. Define playbooks for the top failure modes and tie them to triggers and actions.

A simple playbook structure:

- trigger (what happens)

- action (what the workflow does first)

- comms (who gets notified, and when)

- escalation (when humans step in)
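The same four fields can be stored directly as configuration. Below is a sketch with two made-up exception types; the names, actions, and attempt limits are hypothetical and only meant to show the shape.

```python
# Hypothetical playbooks keyed by exception type.
PLAYBOOKS = {
    "customer_unavailable": {
        "trigger": "driver reports no answer at drop-off",
        "action": "retry_contact",
        "comms": ["customer"],
        "escalate_after_attempts": 2,
    },
    "provider_cancelled": {
        "trigger": "provider cancels after acceptance",
        "action": "reassign_to_backup",
        "comms": ["customer", "store"],
        "escalate_after_attempts": 1,
    },
}


def handle_exception(exception_type: str, attempts_so_far: int) -> dict:
    """Return the next step for an exception, escalating at the policy limit."""
    playbook = PLAYBOOKS.get(exception_type)
    # Unknown exception types and exhausted playbooks both go to a human.
    if playbook is None or attempts_so_far >= playbook["escalate_after_attempts"]:
        return {"step": "escalate_to_human", "notify": ["ops"]}
    return {"step": playbook["action"], "notify": playbook["comms"]}
```

Adding a new failure mode then means adding an entry to the table, not writing a new process.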

Common pitfalls + checklist

- Unclear source of truth for order changes: When multiple systems can change addresses, cancel orders, or edit time windows, workflow logic becomes inconsistent.

- Status mapping gaps: If provider statuses don’t map cleanly into a shared internal status model, tracking and notifications drift.

- Duplicate events creating double-dispatch: If the same event is processed more than once, an order can be dispatched twice or updated incorrectly.

- Exceptions treated as “manual only”: When exception handling lives outside the workflow, support becomes the workflow.

- Too many rules without priority order: Without rule hierarchy, edge cases override standard logic unintentionally.

- No monitoring for workflow health: Failed webhooks, dispatch latency, provider outages, and spike patterns need monitoring, or workflows silently degrade.
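The duplicate-event pitfall in the list above is usually addressed with idempotent processing: remember which event IDs have been handled and ignore repeats. A minimal sketch, assuming each event carries a unique `event_id` and using an in-memory set where a real system would need durable storage:

```python
class Dispatcher:
    """Processes events at most once, keyed by event ID."""

    def __init__(self):
        # Assumption: in-memory only; production would persist seen IDs.
        self._seen_event_ids = set()
        self.dispatched = []

    def handle(self, event: dict) -> bool:
        """Process an event once. Returns False if it was a duplicate."""
        event_id = event["event_id"]
        if event_id in self._seen_event_ids:
            return False  # already handled: do not dispatch again
        self._seen_event_ids.add(event_id)
        if event["type"] == "order_ready":
            self.dispatched.append(event["order_id"])
        return True
```

If a webhook is retried and the same `order_ready` event arrives twice, the second call returns `False` and the order is dispatched only once.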

Workflow customization checklist

- Order intake is consistent across sources (POS, e-commerce, OMS)

- Normalized statuses are defined and mapped across providers

- Rules are readable and tied to outcomes (speed, reliability, cost)

- Provider selection policy exists with defined fallback order

- Batching and routing rules are defined by lane (ASAP vs scheduled)

- Exception playbooks exist for top failure modes

- Customer notifications are triggered by confirmed status changes

- Monitoring is set up for webhook failures, dispatch latency, and provider issues

- Reporting is consistent across zones and locations (on-time, failure, exception rate, cost)

- Agents have scoped responsibilities (monitor, decide, execute) with guardrails

FAQ

What is a delivery workflow?

A delivery workflow is the end-to-end process that moves an order from creation to completion, including dispatch decisions, tracking updates, exception handling, and reporting.

What makes a delivery workflow scalable?

A scalable workflow uses a consistent flow, normalized statuses, readable rules, automated decisioning, and repeatable exception playbooks so new zones, locations, and providers can be added without rebuilding operations.

How do AI agents improve delivery workflows?

AI agents help by monitoring delivery signals, detecting risk early, recommending or executing the next best action within defined policies, and reducing manual intervention during exceptions.

Conclusion

Custom delivery workflows don’t need to trade speed for control. The delivery programs that scale cleanly standardize the workflow spine, use readable rules to handle variation, and automate the decisions that typically slow teams down, such as provider selection, batching, and recovery.

AI and agents add leverage when delivery networks become too dynamic for manual oversight. With clear guardrails, agents can monitor progress, flag risk early, and execute the right action quickly, protecting reliability as complexity grows.

The outcome is straightforward: flexible workflows that scale, fewer operational surprises, and delivery performance that stays consistent as the network expands.
